Stochastic Approximation and Reinforcement Learning: Hidden Theory and New Super-Fast Algorithms

Microsoft Research

Published November 20, 2018, 16:07

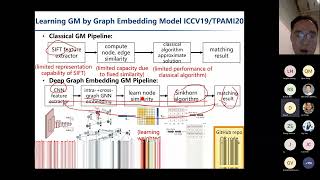

Stochastic approximation algorithms are used to approximate solutions to fixed-point equations that involve expectations of functions with respect to possibly unknown distributions. Among the many such algorithms in machine learning, the reinforcement learning algorithms TD-learning and Q-learning are two of the most famous applications.
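As a minimal illustration of the idea (not taken from the talk), the sketch below uses the classical Robbins-Monro recursion to find a quantile, that is, the root of f̄(θ) = q − P(X ≤ θ), using only samples from the distribution. The function name `estimate_quantile`, the Uniform(0, 1) test distribution, and the step size 1/n are assumptions chosen for the example.

```python
import random

def estimate_quantile(sample, q, n_steps):
    """Robbins-Monro recursion for the root of f_bar(theta) = q - P(X <= theta)."""
    theta = 0.0
    for n in range(1, n_steps + 1):
        x = sample()                             # one draw from the (unknown) distribution
        f_n = q - (1.0 if x <= theta else 0.0)   # noisy observation of f_bar(theta)
        theta += f_n / n                         # step size alpha_n = 1/n
    return theta

# Hypothetical test case: the 0.9-quantile of Uniform(0, 1) is 0.9.
rng = random.Random(0)
theta_hat = estimate_quantile(rng.random, q=0.9, n_steps=20000)
print(theta_hat)
```

The recursion never evaluates the distribution function itself; each step only compares one sample with the current estimate, which is what makes the method usable when the distribution is unknown.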

This talk will provide an overview of stochastic approximation, with a focus on optimizing the rate of convergence. Based on this general theory, the well-known slow convergence of Q-learning is explained: the variance of the algorithm is typically infinite. Three new Q-learning algorithms are introduced to dramatically improve performance: (i) the Zap Q-learning algorithm, which has provably optimal asymptotic variance and resembles the Newton-Raphson method in a deterministic setting; (ii) the PolSA algorithm, which is based on Polyak's momentum technique but uses a specialized matrix momentum; and (iii) the NeSA algorithm, which is based on Nesterov's acceleration technique.

Analysis of (ii) and (iii) requires entirely new analytic techniques. One approach is via coupling: conditions are established under which the parameter estimates obtained using the PolSA algorithm couple with those obtained using the Newton-Raphson-based algorithm. Numerical examples confirm this behavior and the remarkable performance of these algorithms.
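To make the role of the gain concrete, here is a one-dimensional sketch, assuming a hypothetical linear problem E[b_n − a_n θ] = 0 with a small slope a = 0.1. It compares the plain recursion with step size 1/n against a stochastic Newton-Raphson-style recursion that divides by a running estimate of the slope, updated on a faster time scale. This is only in the spirit of the Newton-Raphson gain mentioned above; it is not the Zap Q-learning algorithm from the talk, and the function `run_sa`, the noise levels, and the exponent 0.85 are assumptions made for illustration.

```python
import random

def run_sa(n_steps, newton, seed=0):
    """Solve E[b_n - a_n * theta] = 0 from noisy samples; the root is b / a."""
    rng = random.Random(seed)
    a_true, b_true = 0.1, 1.0                  # hypothetical problem, root theta* = 10
    theta, a_hat = 0.0, 1.0
    for n in range(1, n_steps + 1):
        a_n = a_true + rng.gauss(0.0, 0.01)    # noisy slope observation
        b_n = b_true + rng.gauss(0.0, 0.1)     # noisy offset observation
        f_n = b_n - a_n * theta                # noisy observation of f_bar(theta)
        alpha = 1.0 / n
        if newton:
            gamma = n ** -0.85                 # faster time scale for the slope estimate
            a_hat += gamma * (a_n - a_hat)     # running estimate of the slope
            theta += alpha * f_n / a_hat       # Newton-Raphson-style gain 1 / a_hat
        else:
            theta += alpha * f_n               # plain scalar-gain recursion
    return theta

plain = run_sa(20000, newton=False)
zap_like = run_sa(20000, newton=True)
print(abs(plain - 10.0), abs(zap_like - 10.0))
```

With a slope below 1/2 and step size 1/n, standard stochastic approximation theory predicts that the plain recursion converges more slowly than the usual 1/√n rate, so its error should remain visibly larger than that of the Newton-style recursion after the same number of samples.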

See more at microsoft.com/en-us/research/v...
