Microsoft Research · 334K

Published February 8, 2022, 18:05

Speaker: Aadirupa Saha, Postdoctoral Researcher, Microsoft Research NYC

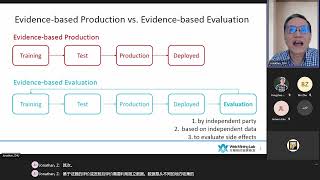

In Preference-based Reinforcement Learning (PbRL), an agent receives feedback only in terms of rank-ordered preferences over a set of selected actions, unlike the absolute reward feedback in traditional reinforcement learning. This is relevant in settings where it is difficult for the system designer to explicitly specify a reward function that achieves a desired behavior, but where it is possible to elicit coarser feedback, say from an expert, about which actions are preferred over others at a given state. The success of the traditional reinforcement learning framework hinges on the underlying agent-reward model, which in turn depends on how accurately a system designer can express an appropriate reward function, often a non-trivial task. The main novelty of the PbRL framework is the ability to learn from non-numeric, preference-based feedback, which eliminates the need to handcraft numeric reward models. We will set up a formal framework for PbRL and discuss different real-world applications. Although PbRL was introduced almost a decade ago, most work on it has been primarily applied or experimental in nature, barring a handful of very recent ventures on the theory side, and we will discuss this gap as well. Finally, we will discuss the limitations of the existing techniques and the scope of future developments.
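To make the feedback model concrete, here is a minimal sketch of learning from pairwise preferences at a single state. It is an illustration only, not the method presented in the talk: the Bradley-Terry preference model, the win-counting rule, the action names, and the helpers `preference_oracle` and `estimate_best_action` are all assumptions introduced for this example.

```python
import math
import random


def preference_oracle(hidden_utility, a, b, rng):
    """Return whichever of actions a and b is preferred; no numeric reward is revealed.

    Assumption: preferences follow a Bradley-Terry model,
    P(a preferred over b) = sigmoid(u[a] - u[b]), with u hidden from the learner.
    """
    p_a = 1.0 / (1.0 + math.exp(-(hidden_utility[a] - hidden_utility[b])))
    return a if rng.random() < p_a else b


def estimate_best_action(actions, oracle, num_queries, rng):
    """Query random action pairs and return the action with the most wins."""
    wins = {a: 0 for a in actions}
    for _ in range(num_queries):
        a, b = rng.sample(actions, 2)  # two distinct actions to compare
        wins[oracle(a, b)] += 1
    return max(wins, key=wins.get), wins


rng = random.Random(0)
# Hypothetical utilities, known only to the simulated expert.
hidden_utility = {"left": 0.0, "stay": 1.0, "right": 3.0}
actions = list(hidden_utility)

best, wins = estimate_best_action(
    actions,
    lambda a, b: preference_oracle(hidden_utility, a, b, rng),
    num_queries=2000,
    rng=rng,
)
print(best, wins)
```

The learner only ever sees which of two actions was preferred, never the utilities themselves, which is the contrast with absolute reward feedback described above.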

Learn more about the 2021 Microsoft Research Summit: Aka.ms/researchsummit
